Understanding MCP (Model Context Protocol) - What It Is, How It Works, and Why It Matters

As applications built on large language models (LLMs) grow more complex, context management is becoming a cornerstone of building efficient and reliable systems. Enter MCP (Model Context Protocol): a protocol designed to help developers and systems define, organize, and control the context passed into AI models. In this post, we’ll explore what MCP is, how it works, and the use cases that demonstrate its value.

🔍 What is MCP (Model Context Protocol)?

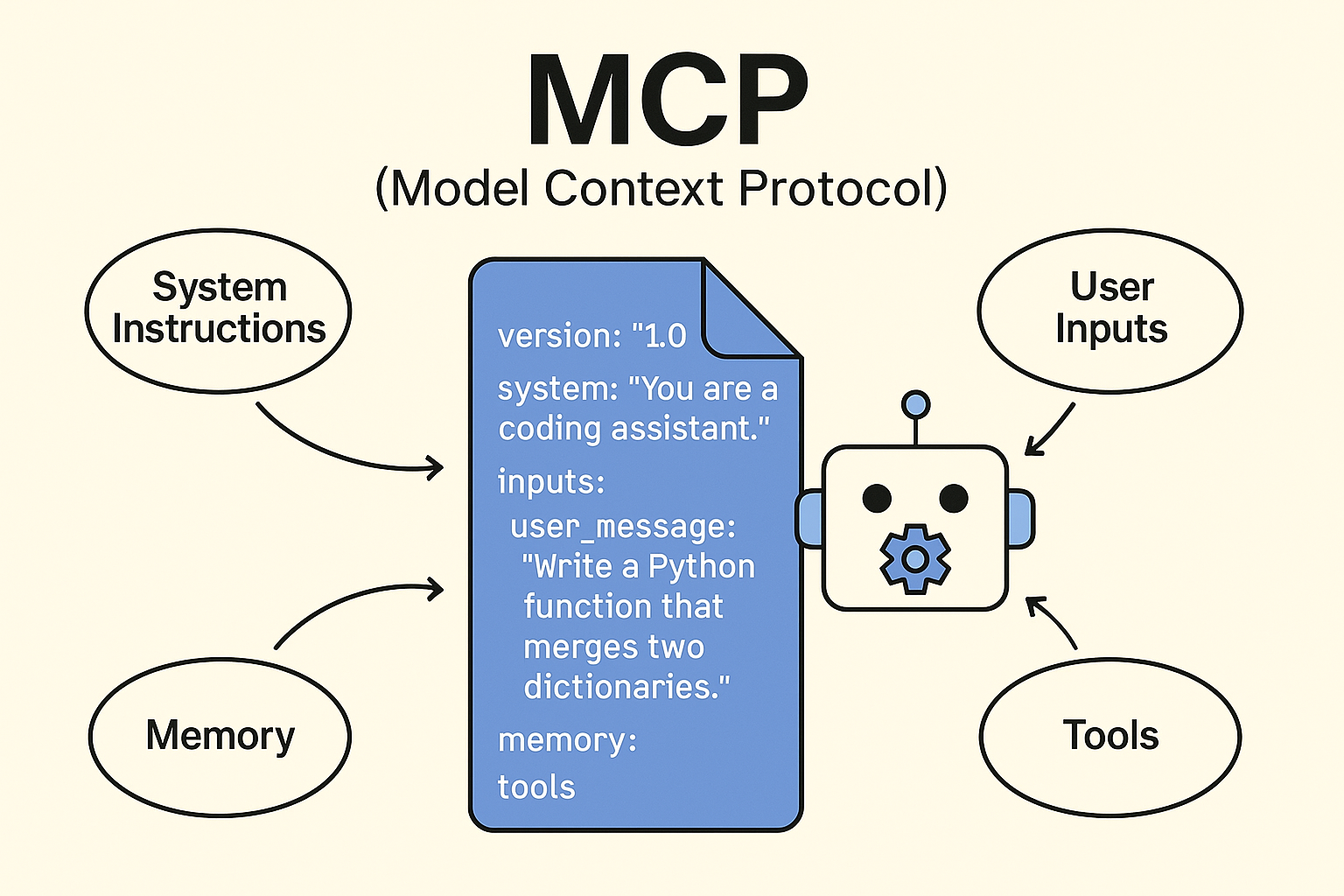

MCP, or Model Context Protocol, is an open protocol for specifying and managing the context that gets sent to language models. Context, in this sense, refers to the structured information provided to an LLM during inference—everything from user inputs and chat history to documents, tools, and system instructions.

MCP is part of a broader movement to standardize how AI applications interact with LLMs, making it easier to build reproducible, debuggable, and modular AI workflows.

Think of MCP as the equivalent of an API contract, but for LLM context. It tells the model what to expect, how to behave, and what tools it has access to—all in a consistent, declarative format.

⚙️ How MCP Works

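The published MCP specification exchanges JSON-RPC 2.0 messages between clients and servers rather than prescribing a configuration file, so the YAML-based declarative configuration used in this post is best read as a simplified way to picture the context involved. In it, the developer defines a context schema that the model runtime interprets. This configuration can include: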

- System instructions: Base directives that set the model’s behavior (e.g., “You are a helpful assistant.”).

- Memory objects: Previous messages or facts the model should remember.

- Tools and functions: Descriptions of callable tools available to the model (like a calculator, API, or database).

- User inputs: Current prompts or queries from users.

- Artifacts: Structured data, like documents or JSON blobs, that the model should reference.

Because the context is declared explicitly and carries a version, each item in it can be traced back to where it came from, which improves observability and debugging during LLM usage.

An example MCP file might look like:

    version: "1.0"
    system: "You are a coding assistant."
    inputs:
      user_message: "Write a Python function that merges two dictionaries."
    memory:
      - type: chat_history
        content:
          - role: user
            content: "Hi, can you help me with Python?"
          - role: assistant
            content: "Sure! What do you need?"
    tools:
      - name: code_linter
        description: "Lint and format Python code."
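To make the shape of that file concrete, here is a minimal Python sketch that turns the same context into a chat-style message list. The dictionary mirrors the example file above. The function name `render_messages` and the output layout are assumptions made for illustration, not part of any published MCP API.

```python
from typing import Any

# Hypothetical in-memory form of the example context file (assumed layout).
context: dict[str, Any] = {
    "version": "1.0",
    "system": "You are a coding assistant.",
    "inputs": {"user_message": "Write a Python function that merges two dictionaries."},
    "memory": [
        {
            "type": "chat_history",
            "content": [
                {"role": "user", "content": "Hi, can you help me with Python?"},
                {"role": "assistant", "content": "Sure! What do you need?"},
            ],
        }
    ],
    "tools": [{"name": "code_linter", "description": "Lint and format Python code."}],
}


def render_messages(ctx: dict[str, Any]) -> list[dict[str, str]]:
    """Flatten system text, remembered chat history, and the new user input."""
    messages = [{"role": "system", "content": ctx["system"]}]
    for item in ctx.get("memory", []):
        # Only chat history is replayed as messages in this sketch.
        if item.get("type") == "chat_history":
            messages.extend(item["content"])
    messages.append({"role": "user", "content": ctx["inputs"]["user_message"]})
    return messages


messages = render_messages(context)
```

The point of the sketch is that the prompt is derived from the declared context rather than assembled by hand, so the same file always produces the same message list.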

🧠 Why Use MCP?

As applications become more complex, hand-written prompts become harder to scale and maintain. Developers need:

- Modularity: Reuse context components like tools or user profiles across sessions.

- Auditability: Track what was sent to the model and why it responded the way it did.

- Interoperability: Use a shared context format across multiple model vendors or frameworks.

- Observability: Inspect and debug the actual context used during inference.

MCP helps solve these problems by separating context building from business logic, and promoting reproducibility.
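One way to picture the modularity point is a set of small, reusable context components that get merged per session. The sketch below is only an illustration: `ContextBundle` and `merge` are hypothetical names, and the merge rules (join system text, de-duplicate tools and facts) are an assumption rather than anything MCP prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextBundle:
    """Hypothetical reusable context component (name and fields are assumed)."""
    system: str = ""
    tools: tuple[str, ...] = ()
    facts: tuple[str, ...] = ()


def merge(*bundles: ContextBundle) -> ContextBundle:
    """Combine bundles in order: join system texts, de-duplicate tools and facts."""
    system = "\n".join(b.system for b in bundles if b.system)
    # dict.fromkeys keeps first-seen order while dropping duplicates.
    tools = tuple(dict.fromkeys(t for b in bundles for t in b.tools))
    facts = tuple(dict.fromkeys(f for b in bundles for f in b.facts))
    return ContextBundle(system=system, tools=tools, facts=facts)


# Reusable pieces, defined once and shared across sessions.
BASE = ContextBundle(system="You are a helpful assistant.")
CODING = ContextBundle(system="Prefer Python examples.", tools=("code_linter",))
USER_PROFILE = ContextBundle(facts=("User prefers concise answers.",))

session_context = merge(BASE, CODING, USER_PROFILE)
```

Business logic then only decides which bundles apply to a session; how they combine lives in one place.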

✅ Example Use Cases

1. Agent-Based Applications

MCP enables agents built with frameworks such as LangChain or AutoGen to clearly define toolkits, memory, and goals. You can declaratively list the tools available to the agent and pass them as structured context to the model runtime.
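As a rough sketch of what "declaratively listing tools" could look like in code, the example below describes two tools as plain data and serializes them for a runtime. The `ToolSpec` class, both tool names, and the parameter notation are hypothetical and are not taken from LangChain, AutoGen, or the MCP specification.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ToolSpec:
    """Hypothetical declarative tool description (assumed fields)."""
    name: str
    description: str
    parameters: dict


TOOLS = [
    ToolSpec(
        name="calculator",
        description="Evaluate a basic arithmetic expression.",
        parameters={"expression": "string"},
    ),
    ToolSpec(
        name="search_docs",
        description="Search internal documentation.",
        parameters={"query": "string", "limit": "integer"},
    ),
]


def tools_as_context(tools: list[ToolSpec]) -> str:
    """Serialize the tool list to JSON so it can be passed along as structured context."""
    return json.dumps([asdict(t) for t in tools], indent=2)


tool_context = tools_as_context(TOOLS)
```

Because the tools are data rather than prose buried in a prompt, adding or removing one is a one-line change that is easy to review.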

2. Enterprise Chatbots

In customer service, MCP can define rules, FAQs, and access control logic for LLMs. By declaring memory, user roles, and pre-approved documents, you make compliance and consistency easier to enforce.
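A small sketch of the access control idea: only documents approved for the current user's role are allowed into the context. The `Document` class, the role names, and the sample document text are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical pre-approved document with the roles allowed to see it."""
    title: str
    body: str
    allowed_roles: frozenset[str]


# Sample data invented for this example.
APPROVED_DOCS = [
    Document("Refund policy", "Sample refund policy text.", frozenset({"customer", "agent"})),
    Document("Escalation playbook", "Sample escalation steps.", frozenset({"agent"})),
]


def documents_for(role: str, docs: list[Document]) -> list[Document]:
    """Return only the documents the given role may see."""
    return [d for d in docs if role in d.allowed_roles]


customer_docs = documents_for("customer", APPROVED_DOCS)
agent_docs = documents_for("agent", APPROVED_DOCS)
```

Filtering before the context is built means a document the user may not see never reaches the model at all, which is simpler to reason about than asking the model to withhold it.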

3. Coding Assistants

By declaring available functions (e.g., a code executor or documentation fetcher), coding assistants can use tools intelligently without hardcoding them into the prompt.
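On the application side, declared functions can be kept in a registry and invoked by name when the model requests one. The sketch below assumes a tool call arrives as a dictionary of the form `{"name": ..., "arguments": {...}}`. That shape, the `dispatch` helper, and both stand-in tools are assumptions for illustration only.

```python
from typing import Callable


def fetch_docs(symbol: str) -> str:
    """Stand-in documentation fetcher; a real one would query a docs index."""
    return f"Documentation for {symbol} (placeholder)"


def count_lines(code: str) -> int:
    """Stand-in tool: count the non-empty lines in a code snippet."""
    return sum(1 for line in code.splitlines() if line.strip())


# Declared tools: name -> callable. The prompt itself never hardcodes these.
TOOL_REGISTRY: dict[str, Callable[..., object]] = {
    "fetch_docs": fetch_docs,
    "count_lines": count_lines,
}


def dispatch(call: dict) -> object:
    """Run a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    name = call["name"]
    if name not in TOOL_REGISTRY:
        # Refuse anything that was not explicitly declared.
        raise KeyError(f"Tool not declared: {name}")
    return TOOL_REGISTRY[name](**call.get("arguments", {}))


result = dispatch({"name": "count_lines", "arguments": {"code": "a = 1\n\nb = 2\n"}})
```

Rejecting undeclared names keeps the set of callable functions identical to the set that was declared in the context.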

4. Observability and Debugging

When models hallucinate or fail, MCP context logs provide full transparency into what information was available to the model—helping teams debug issues faster.
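A context log can be as simple as storing the exact context next to the response, plus a stable fingerprint so identical contexts are easy to spot. This is a minimal sketch using only the Python standard library. The record layout and the function names `fingerprint` and `log_record` are assumptions, not an MCP-defined log format.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(context: dict) -> str:
    """Stable SHA-256 of the context; sorted keys make equal contexts hash equally."""
    canonical = json.dumps(context, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def log_record(context: dict, response: str) -> dict:
    """Build one audit record pairing the exact context with the model's response."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "context_sha256": fingerprint(context),
        "context": context,
        "response": response,
    }


# Same content, different key order: the fingerprints should match.
ctx_a = {"system": "You are a coding assistant.", "inputs": {"user_message": "Hi"}}
ctx_b = {"inputs": {"user_message": "Hi"}, "system": "You are a coding assistant."}
record = log_record(ctx_a, "Hello! How can I help?")
```

When a response looks wrong, the record shows exactly what the model was given, and comparing fingerprints tells you quickly whether two runs saw the same context.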

🚀 Final Thoughts

MCP is still evolving, but it’s already proving useful for production-grade LLM applications. By abstracting and standardizing model context, it helps teams build safer and more maintainable AI systems.

Whether you're building a chatbot, an agentic system, or a retrieval-augmented generation (RAG) pipeline, embracing MCP can offer a cleaner, more scalable path forward.