Explaining JSON Web Tokens (JWT) - A Secure and Versatile Authentication Mechanism

In the rapidly evolving world of web development, the need for robust and secure authentication mechanisms has become paramount. JSON Web Tokens (JWT) have emerged as a popular solution, revolutionizing the way applications handle user authentication. In this blog post, we will delve into the fascinating world of JWTs, exploring their architecture, benefits, use cases, and best practices.

1. Understanding JWTs: What are they?

JSON Web Tokens, commonly referred to as JWTs, are compact, URL-safe tokens used to transmit claims between two parties in a way the recipient can verify. Each token is a single string and is self-contained: it carries the information needed to validate it, so the server does not have to look up session state to check a token.

2. How do JWTs work?

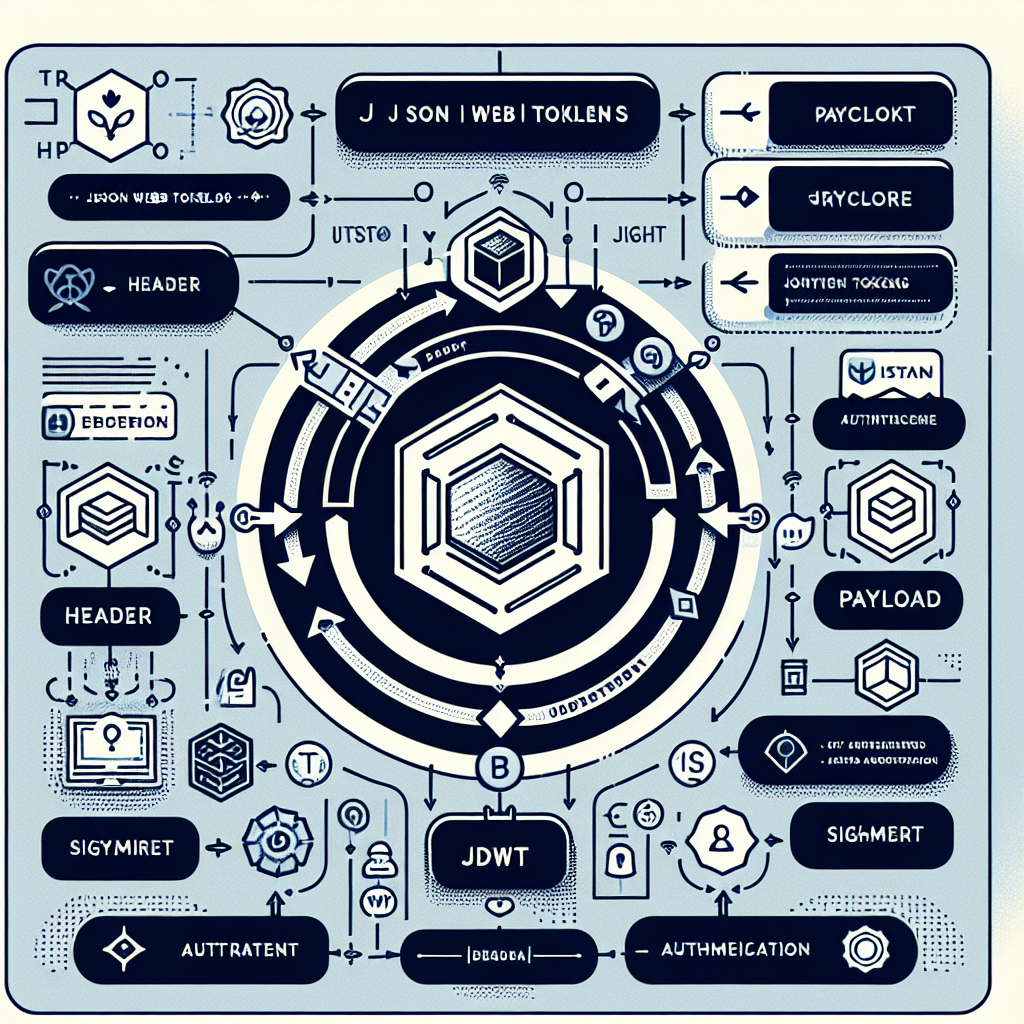

A JWT consists of three parts separated by dots: the header, the payload, and the signature. These parts are Base64Url-encoded and concatenated to form the JWT. Let's explore each part:

a. Header: The header typically consists of two fields: the type of token ("typ", usually JWT) and the signing algorithm used ("alg"), such as HMAC SHA256 or RSA. The header is only encoded, not encrypted, so anyone can read it; its purpose is to tell the recipient how the token was signed.

b. Payload: The payload contains the claims, which are statements about the user and additional data. There are three types of claims: registered, public, and private. Registered claims are standard fields like "iss" (issuer), "exp" (expiration time), "sub" (subject), and more. Public claims can be defined freely by those using JWTs, but to avoid name collisions they should be registered or given collision-resistant names. Private claims are custom claims that the parties exchanging the token agree upon in advance.

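c. Signature: The signature is computed over the encoded header and the encoded payload, joined by a dot, using the algorithm named in the header and a key. With HMAC the key is a secret shared only with parties that need to verify tokens; with RSA the issuer signs with a private key and recipients verify with the matching public key. The signature protects the integrity of the token: the recipient can recompute or verify it and detect whether the token has been tampered with.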
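To make the three parts concrete, here is a minimal sketch of building an HMAC SHA256 ("HS256") token with only the Python standard library. The helper names (`b64url_encode`, `sign_hs256`), the secret, and the claim values are assumptions made up for illustration; in a real application you would normally rely on a maintained JWT library rather than hand-rolled code.

```python
import base64
import hashlib
import hmac
import json


def b64url_encode(data: bytes) -> str:
    """Base64Url-encode and strip the '=' padding, as JWTs do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def sign_hs256(header: dict, payload: dict, secret: bytes) -> str:
    """Return header.payload.signature, signed with HMAC SHA256."""
    # Compact JSON (no spaces) keeps the token small.
    encoded_header = b64url_encode(
        json.dumps(header, separators=(",", ":")).encode("utf-8")
    )
    encoded_payload = b64url_encode(
        json.dumps(payload, separators=(",", ":")).encode("utf-8")
    )
    # The signature covers "<encoded header>.<encoded payload>".
    signing_input = f"{encoded_header}.{encoded_payload}"
    signature = hmac.new(
        secret, signing_input.encode("ascii"), hashlib.sha256
    ).digest()
    return f"{signing_input}.{b64url_encode(signature)}"


# Hypothetical secret and claims, for illustration only.
SECRET = b"demo-secret-do-not-use-in-production"
token = sign_hs256(
    {"alg": "HS256", "typ": "JWT"},
    {"iss": "example-issuer", "sub": "user-123", "exp": 1900000000},
    SECRET,
)
print(token)  # three Base64Url segments separated by dots
```

Changing any character of the header or payload changes the signing input, so the signature no longer matches unless it is recomputed with the secret.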

3. Benefits of using JWTs

a. Stateless: Unlike traditional session-based authentication systems, JWTs are stateless. The server doesn't need to store session information, resulting in reduced overhead and improved scalability.

b. Security: JWTs are signed, so any modification of the data within them can be detected by the recipient. Signing does not hide the contents, however. If confidentiality is required, a token can additionally be encrypted, although this is optional.

c. Flexibility: JWTs are versatile and can be used for more than just authentication. They can carry arbitrary data, making them ideal for sharing user-related information across microservices.

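d. Cross-domain compatibility: JWTs can be transmitted in a URL or in an HTTP request header (typically "Authorization: Bearer" followed by the token), making them suitable for single sign-on (SSO) scenarios. Prefer the header where possible, since URLs tend to end up in logs and browser history.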
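As a rough illustration of points b and d, the sketch below pulls a token out of an "Authorization" header and checks its signature by recomputing it. It assumes HS256 with a shared secret; the function names and sample values are hypothetical, and a production verifier would also need to confirm that the header's "alg" is the one it expects and to validate the claims.

```python
import base64
import hashlib
import hmac
from typing import Mapping, Optional


def b64url_encode(data: bytes) -> str:
    """Base64Url-encode without '=' padding."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def extract_bearer_token(headers: Mapping[str, str]) -> Optional[str]:
    """Return the token from 'Authorization: Bearer <token>', or None."""
    scheme, _, credentials = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not credentials.strip():
        return None
    return credentials.strip()


def signature_is_valid(token: str, secret: bytes) -> bool:
    """Recompute the HS256 signature and compare in constant time."""
    parts = token.split(".")
    if len(parts) != 3:
        return False
    signing_input = f"{parts[0]}.{parts[1]}".encode("utf-8")
    expected = b64url_encode(
        hmac.new(secret, signing_input, hashlib.sha256).digest()
    )
    return hmac.compare_digest(expected.encode("utf-8"), parts[2].encode("utf-8"))


# Hypothetical secret and payloads, for illustration only.
SECRET = b"demo-secret"
header = b64url_encode(b'{"alg":"HS256","typ":"JWT"}')
payload = b64url_encode(b'{"sub":"user-123","role":"user"}')
signature = b64url_encode(
    hmac.new(SECRET, f"{header}.{payload}".encode("utf-8"), hashlib.sha256).digest()
)
token = f"{header}.{payload}.{signature}"

# An attacker swaps the payload but cannot produce a matching signature.
forged_payload = b64url_encode(b'{"sub":"user-123","role":"admin"}')
forged = f"{header}.{forged_payload}.{signature}"

received = extract_bearer_token({"Authorization": f"Bearer {token}"})
print(signature_is_valid(received, SECRET))  # True
print(signature_is_valid(forged, SECRET))    # False
```

Note that the server needs only the secret to make this decision; no session lookup is involved, which is what the "stateless" benefit refers to.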

4. Common Use Cases

JWTs find application in various scenarios, including:

a. Authentication and Authorization: JWTs are primarily used to authenticate users securely and grant them access to specific resources or actions.

b. Single Sign-On (SSO): In an SSO system, a user logs in once and gains access to multiple applications without the need to log in again for each one. JWTs make this process seamless and secure.

c. Information Exchange: JWTs can be used to share information between different services or microservices in a distributed application architecture.

5. Best Practices for JWT Implementation

a. Secure Key Management: Ensure that the secret used for signing the JWTs is adequately protected. Consider using asymmetric algorithms for enhanced security.

b. Token Expiration: Set a reasonably short expiration time for JWTs to minimize the window of vulnerability.

c. Avoid Sensitive Data: Refrain from storing sensitive information in the payload. A signed JWT is only Base64Url-encoded, not encrypted, so anyone who obtains the token can decode and read its contents without the key.

d. Token Revocation: In certain cases, like a compromised token, you might need to implement a token revocation mechanism to invalidate JWTs before their expiration.
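The four practices above can be sketched together in a few small helpers. This is only an illustration under stated assumptions: the environment variable name `JWT_SIGNING_SECRET`, the function names, the 15-minute lifetime, and the in-memory set used as a denylist are all hypothetical, and signature verification (which must happen before any claim is trusted) is left out to keep the example short.

```python
import base64
import json
import os
import time
from typing import Optional, Set


def load_secret(env_var: str = "JWT_SIGNING_SECRET") -> bytes:
    """(a) Read the signing secret from the environment, not from source code."""
    value = os.environ.get(env_var)
    if not value:
        raise RuntimeError(f"{env_var} is not set")
    return value.encode("utf-8")


def read_claims_unverified(token: str) -> dict:
    """(c) Decode the payload without any key: anyone holding the token can."""
    encoded_payload = token.split(".")[1]
    padding = "=" * (-len(encoded_payload) % 4)  # restore stripped padding
    return json.loads(base64.urlsafe_b64decode(encoded_payload + padding))


def is_expired(claims: dict, now: Optional[float] = None) -> bool:
    """(b) A token is expired once the current time reaches its 'exp' claim."""
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)):
        return True  # sketch policy: reject tokens without a usable 'exp'
    return (time.time() if now is None else now) >= exp


def is_revoked(claims: dict, revoked_ids: Set[str]) -> bool:
    """(d) Check the token's 'jti' (JWT ID) claim against a denylist."""
    return claims.get("jti") in revoked_ids


# Hypothetical claims with a 15-minute lifetime.
issued_at = 1_700_000_000
claims = {"sub": "user-123", "jti": "token-abc", "iat": issued_at, "exp": issued_at + 900}
payload_segment = (
    base64.urlsafe_b64encode(json.dumps(claims).encode("utf-8"))
    .rstrip(b"=")
    .decode("ascii")
)
token = f"header.{payload_segment}.signature"  # placeholder header and signature

decoded = read_claims_unverified(token)
print(decoded["sub"])                            # user-123
print(is_expired(decoded, now=issued_at + 60))   # False
print(is_expired(decoded, now=issued_at + 901))  # True
print(is_revoked(decoded, {"token-abc"}))        # True
```

A denylist brings back some server-side state, which is the trade-off for being able to invalidate a token early. Keeping lifetimes short limits how long each revoked ID has to be remembered.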

Conclusion

JSON Web Tokens have become a cornerstone of modern web development, providing a secure and efficient means of authentication and data exchange. By understanding how JWTs work and following best practices, developers can implement robust and scalable authentication solutions for their applications. As we continue to witness advancements in web technologies, JWTs will undoubtedly remain an essential tool for ensuring the integrity and security of our online experiences.