Preparing for a System Design Interview

Hey there, welcome to "Continuous Improvement," the podcast where we explore strategies and techniques for personal and professional growth. I'm your host, Victor, and in today's episode, we're diving into an essential topic for software engineers and developers: system design interviews.

System design interviews can be a bit daunting, but with the right preparation and approach, you can excel and land your dream job. In this episode, we'll provide you with a comprehensive guide on how to prepare for a system design interview successfully.

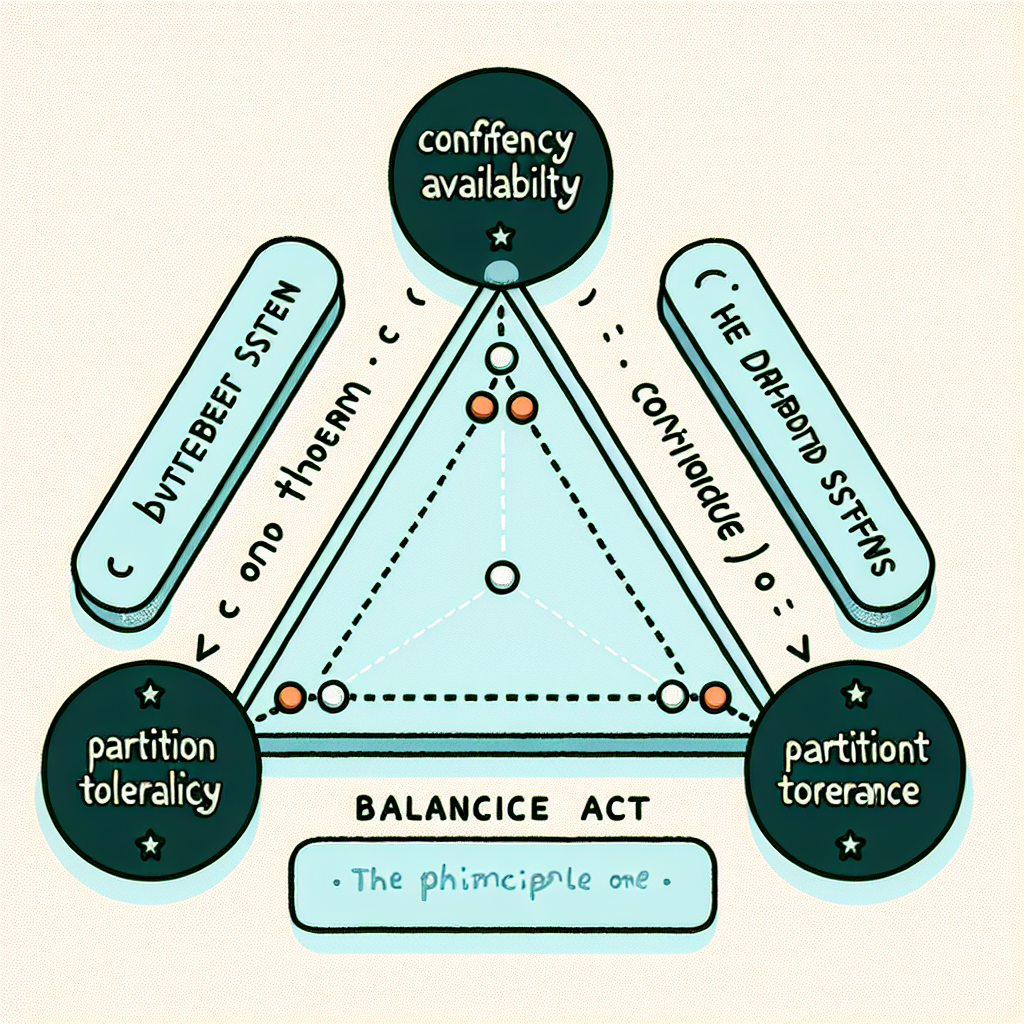

Let's start with the fundamentals. To excel in a system design interview, you need a solid understanding of concepts like distributed systems, networking, databases, caching, scalability, and load balancing. It's crucial to be familiar with the pros and cons of different technologies and their appropriate use cases.

Moving on, studying real-world systems is a great way to gain practical knowledge. Dive into the architectures behind popular services like Twitter, Facebook, Netflix, and Google. Understand how these systems handle millions of users, scale their infrastructure, and tackle common challenges. Analyze the trade-offs they make and the techniques they employ for high availability, fault tolerance, and low latency.

Next, it's essential to learn system design patterns. These serve as building blocks for designing scalable systems. Familiarize yourself with patterns like layered architecture, microservices, event-driven architecture, caching, sharding, and replication. Understanding these patterns will help you design robust and scalable systems during the interview.
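To make one of those patterns a bit more concrete, here's a minimal sketch of hash-based sharding in Python. It's only an illustration, not a production design: the function name `shard_for`, the key format, and the shard count are all assumptions made up for this example.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index with a stable hash (hypothetical helper)."""
    if num_shards <= 0:
        raise ValueError("num_shards must be positive")
    # hashlib gives the same digest on every run and every machine, unlike
    # Python's built-in hash(), which is randomized per process for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and reduce it to a shard index.
    return int.from_bytes(digest[:8], "big") % num_shards


# Illustrative keys and shard count, chosen only for this example.
for user_id in ("user:1", "user:2", "user:3"):
    print(user_id, "-> shard", shard_for(user_id, 4))
```

One trade-off worth mentioning out loud in an interview: with simple modulo sharding like this, changing the number of shards moves most keys to a different shard. Consistent hashing is a common alternative when shards are added or removed often.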

Now, let's talk about practice. Regularly engage in whiteboard design sessions to simulate the interview environment. Start by selecting a problem statement and brainstorming a high-level design. Focus on scalability, fault tolerance, and performance optimization. Break down the problem into modules, identify potential bottlenecks, and propose appropriate solutions. Don't forget to use diagrams and code snippets to explain your design. Practicing regularly will enhance your problem-solving skills and boost your confidence during the actual interview.
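One habit that can help when you're hunting for bottlenecks is a quick back-of-the-envelope estimate. Here's a rough sketch in Python, assuming a hypothetical service with made-up traffic numbers. The function name `estimate_qps`, the default peak factor of 2, and the inputs are illustrative assumptions, not figures from any real system.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def estimate_qps(daily_active_users, requests_per_user_per_day, peak_factor=2.0):
    """Return (average_qps, peak_qps) for a hypothetical service.

    peak_factor is an assumed multiplier for how much busier the peak is
    than the daily average; pick a value and state it in the interview.
    """
    total_requests = daily_active_users * requests_per_user_per_day
    average_qps = total_requests / SECONDS_PER_DAY
    return average_qps, average_qps * peak_factor


# Made-up inputs: 10 million daily users, 10 requests each per day.
avg, peak = estimate_qps(10_000_000, 10)
print(f"{avg:.0f} average QPS, {peak:.0f} peak QPS")
# prints "1157 average QPS, 2315 peak QPS"
```

The exact numbers matter less than stating your assumptions and showing how they shape the design, for example whether a single database can plausibly absorb the peak load.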

Additionally, reviewing system design case studies can provide valuable insights into real-world design challenges. There are numerous resources available, such as books and online platforms, that offer case studies and solutions. Analyze these case studies, understand the design choices, and think critically about alternative approaches. This exercise will improve your ability to evaluate trade-offs and make informed design decisions.

Collaboration is another powerful tool for mastering system design interviews. Work on design projects with peers and engage in group discussions. Designing systems together exposes you to diverse perspectives and helps you learn from others. Consider participating in online coding communities or joining study groups dedicated to system design interview preparation.

Lastly, seeking feedback is crucial for improvement. After practicing system design interviews, don't hesitate to ask for feedback from experienced engineers or interviewers. They can provide valuable insights into areas where you can enhance your designs, identify blind spots, and offer suggestions for improvement. Incorporate this feedback into your preparation process and iterate on your designs.

To wrap up, preparing for a system design interview requires a combination of theoretical knowledge, practical understanding, and hands-on experience. Remember to approach system design interviews with a logical mindset, focus on scalability and performance, and demonstrate excellent communication skills. With dedication, practice, and the right mindset, you can master system design interviews and advance your career as a software engineer.

That's all for today's episode of "Continuous Improvement." I hope you found these tips helpful as you prepare for your system design interviews. Stay tuned for more episodes where we explore different aspects of personal and professional growth. I'm your host, Victor, signing off. See you next time!