Understanding Data Governance in the Digital Age

Data has become a critical asset for organizations, and managing it effectively requires a structured approach. Data governance is the process by which an organization defines authority, control, and accountability over the management of its data assets. As businesses rely more heavily on data to drive decisions, sound governance becomes essential for operational excellence and regulatory compliance.

What is Data Governance?

At its core, data governance is about defining decision rights and accountability frameworks to ensure that data is valued, created, consumed, and controlled appropriately. According to Gartner, data governance specifies decision rights and accountability to ensure proper data behavior, while DAMA defines it as the exercise of authority and control over data management through shared decision-making processes.

Informatica highlights that data governance also involves defining organizational structures, policies, and metrics that govern the entire data lifecycle. This end-to-end approach ensures data integrity, consistency, and availability, enabling businesses to leverage their data assets fully.

Key Principles of Data Governance

To implement an effective data governance strategy, organizations must adhere to several guiding principles:

- Data as an Asset: Data is a critical business asset, and organizations must treat it with the same care and consideration as any other valuable resource. This requires clear strategies, decision-making processes, and innovation to maximize its value.

- Data Stewardship: Everyone within the organization has a responsibility toward data governance. Effective stewardship ensures data is handled with care and is available where needed.

- Data Quality: Data must be accurate, consistent, and relevant to business needs. The principle of "Right the First Time, Every Time" emphasizes the importance of maintaining data quality throughout its lifecycle.

- Data Compliance: Organizations must ensure that data handling practices comply with relevant laws and regulations, including data privacy, security, and retention policies.

- Data Security: Protecting data from unauthorized access, breaches, and other security risks is a fundamental aspect of data governance. This requires robust security protocols and continuous monitoring to safeguard data 24/7.

- Data Sharing and Accessibility: Data governance encourages the sharing of data across departments to maximize its value. However, data sharing should be governed by strict access controls to ensure only authorized users can access sensitive information.
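To make the Data Quality and Data Sharing principles more concrete, the following is a minimal Python sketch of how such rules might be expressed as code. Everything in it is an illustrative assumption rather than part of any particular framework or product: the `CustomerRecord` fields, the three classification labels, the role names, and the specific quality rules are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sensitivity labels, ordered from least to most restricted.
CLASSIFICATION_RANK = {"public": 0, "internal": 1, "confidential": 2}

# Hypothetical mapping from a role to the highest classification it may read.
ROLE_CLEARANCE = {"analyst": "internal", "data_steward": "confidential"}


@dataclass
class CustomerRecord:
    """Illustrative record type; the fields are assumptions for this sketch."""
    customer_id: str
    email: str
    country: str


def quality_issues(record: CustomerRecord) -> list[str]:
    """Return a list of data quality problems (empty if the record passes)."""
    issues = []
    if not record.customer_id.strip():
        issues.append("customer_id is missing")
    if "@" not in record.email:
        issues.append("email is not well formed")
    if len(record.country) != 2 or not record.country.isupper():
        issues.append("country is not a two-letter uppercase code")
    return issues


def can_access(role: str, classification: str) -> bool:
    """Allow access only when the role's clearance covers the classification."""
    clearance = ROLE_CLEARANCE.get(role)
    if clearance is None or classification not in CLASSIFICATION_RANK:
        return False  # deny by default for unknown roles or unknown labels
    return CLASSIFICATION_RANK[clearance] >= CLASSIFICATION_RANK[classification]
```

Running checks like `quality_issues` at the point of data entry is one way to approach "Right the First Time, Every Time", and the deny-by-default branch in `can_access` reflects the idea that only explicitly authorized users should reach sensitive data.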

Data Governance Frameworks

To formalize data governance practices, organizations can adopt established frameworks, such as:

- DGI Data Governance Framework

- CMMI (Capability Maturity Model Integration)

- DAMA-DMBOK (Data Management Body of Knowledge)

- EDM Council DCAM (Data Management Capability Assessment Model)

These frameworks provide structured approaches to assess and improve data management practices. By following these frameworks, organizations can align their data governance efforts with industry best practices and ensure continuous improvement.

The Data Lifecycle

Data governance is not just about setting policies; it extends to managing data throughout its entire lifecycle. From creation and collection to storage, usage, and eventual disposal, data must be handled with care at each stage.

- Data Creation: This includes data entry, acquisition, and capture through various processes.

- Data Storage: Data must be cleansed, classified, and stored securely to ensure its availability and integrity.

- Data Usage: Organizations must ensure that data is used ethically and legally, with proper audit trails to track modifications and access.

- Data Archival and Disposal: When data is no longer needed, it should be archived or disposed of in a manner that complies with organizational policies and regulatory requirements.
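The archival and disposal stage, together with the audit trail mentioned under Data Usage, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the category names, the retention periods in `RETENTION_DAYS`, the 30-day archive threshold, and the shape of the audit entries are hypothetical and would in practice come from organizational policy and applicable regulation.

```python
from datetime import date, timedelta

# Hypothetical retention periods per data category, in days.
RETENTION_DAYS = {"invoice": 3650, "web_log": 90}

# Assumed threshold: data unused for longer than this is moved to an archive.
ARCHIVE_AFTER_DAYS = 30

# In-memory audit trail for this sketch; a real system would persist it.
audit_log: list[dict] = []


def lifecycle_action(category: str, created: date, last_used: date, today: date) -> str:
    """Return 'dispose', 'archive', 'retain', or 'review' for one dataset."""
    if category not in RETENTION_DAYS:
        return "review"  # unknown categories go to a data steward, not deletion
    if today - created > timedelta(days=RETENTION_DAYS[category]):
        return "dispose"  # retention period has expired
    if today - last_used > timedelta(days=ARCHIVE_AFTER_DAYS):
        return "archive"  # still within retention, but no longer in active use
    return "retain"


def record_decision(dataset: str, action: str, today: date) -> None:
    """Append the decision to the audit trail so it can be traced later."""
    audit_log.append({"dataset": dataset, "action": action, "date": today.isoformat()})
```

For example, with `today = date(2024, 6, 1)`, a `web_log` dataset created on `date(2024, 1, 1)` is past its assumed 90-day retention period, so `lifecycle_action` returns `"dispose"`. Routing unknown categories to `"review"` is a deliberate choice in this sketch: it avoids deleting data that no policy covers yet.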

Challenges and Pitfalls in Data Governance

Implementing a successful data governance strategy is not without challenges. Common pitfalls include:

- Lack of Leadership Commitment: Data governance requires support from top-level executives to succeed.

- Failure to Link Governance to Business Goals: Governance efforts should be directly tied to business objectives to demonstrate value.

- Overemphasis on Monitoring: While monitoring is important, organizations should also focus on data quality improvement.

- Technology Reliance: Technology alone cannot solve governance issues; effective governance requires a balance of people, processes, and technology.

Conclusion

Data governance is a critical aspect of managing data in the digital age. By establishing clear policies, accountability frameworks, and stewardship responsibilities, organizations can manage their data assets effectively and maintain data quality, security, and compliance. With a strong governance framework in place, businesses can treat data as a valuable asset and use it responsibly and strategically, driving innovation and growth while minimizing risk.

各位女士、先生,歡迎返嚟我哋嘅頻道!今日,我好開心同大家分享一下來自吉井雅之嗰本超有改變力嘅書《最強習慣養成 - 3個月╳71個新觀點 打造更好嘅自己》嘅改變人生嘅洞見。

各位女士、先生,歡迎返嚟我哋嘅頻道!今日,我好開心同大家分享一下來自吉井雅之嗰本超有改變力嘅書《最強習慣養成 - 3個月╳71個新觀點 打造更好嘅自己》嘅改變人生嘅洞見。

大家好!歡迎嚟到呢個探討投資、金融同自我成長嘅頻道。今日我哋有個好exciting嘅主題,係源自Howard Marks嗰本備受讚譽嘅書《投資最緊要嘅事:深思投資者嘅非常識》。呢本書影響好深,就連Warren Buffett都讀咗兩次!

大家好!歡迎嚟到呢個探討投資、金融同自我成長嘅頻道。今日我哋有個好exciting嘅主題,係源自Howard Marks嗰本備受讚譽嘅書《投資最緊要嘅事:深思投資者嘅非常識》。呢本書影響好深,就連Warren Buffett都讀咗兩次!

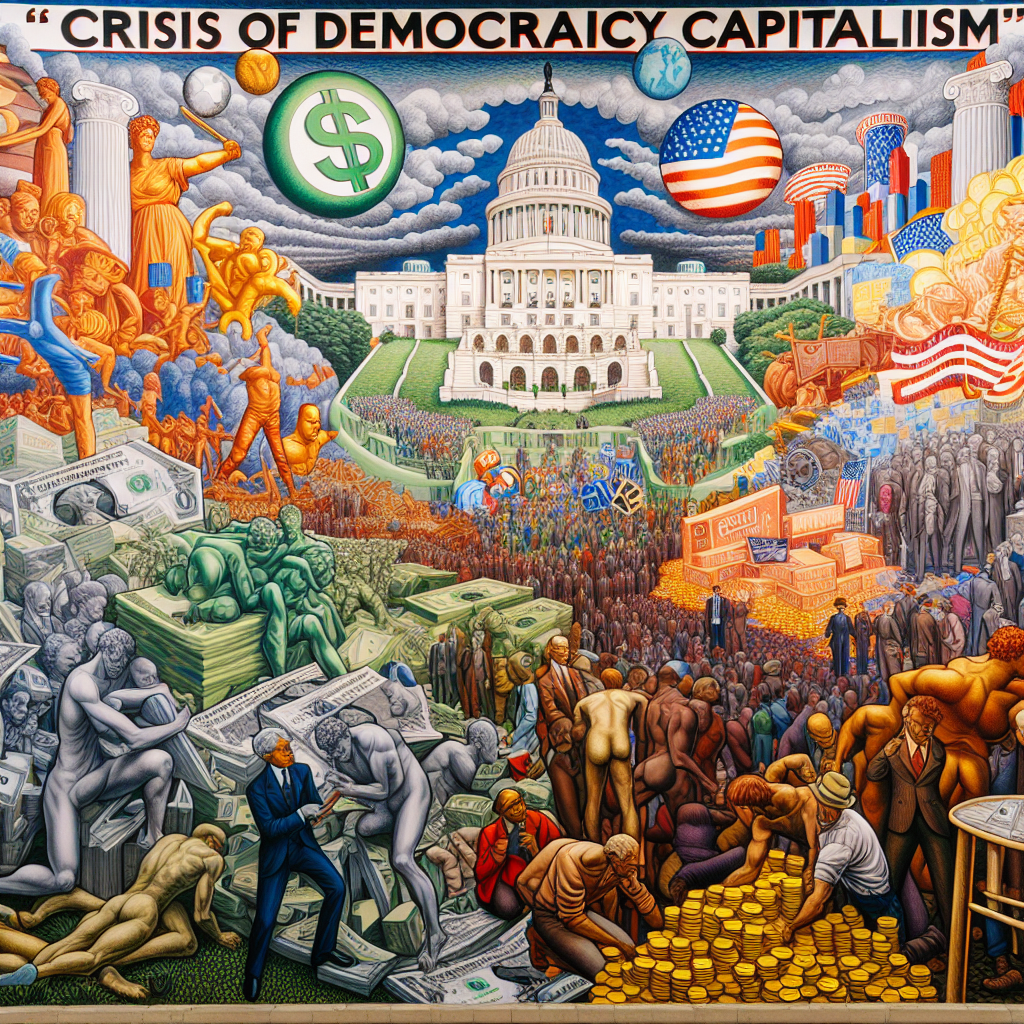

嘿,大家好!歡迎返嚟我哋嘅頻道!今日我哋會深入探討一個好關鍵同埋好及時嘅話題——民主同資本主義之間嘅關係。我哋會深入剖析馬丁·沃爾夫嗰本發人深省嘅書《民主資本主義的危機》裏面啲引人入勝嘅觀點。準備好,呢個係你唔想錯過嘅開眼界話題!

嘿,大家好!歡迎返嚟我哋嘅頻道!今日我哋會深入探討一個好關鍵同埋好及時嘅話題——民主同資本主義之間嘅關係。我哋會深入剖析馬丁·沃爾夫嗰本發人深省嘅書《民主資本主義的危機》裏面啲引人入勝嘅觀點。準備好,呢個係你唔想錯過嘅開眼界話題!